BirdCLEF 2020

Motivation

Monitoring birds by sound is important for many environmental and scientific purposes. Birds are difficult to photograph and sound offers better possibilities for inventory coverage. A number of participatory science projects have focused on recording a very large number of bird sounds, making it possible to recognize most species by their sound and to train deep learning models to automate this process. It was shown in previous editions of BirdCLEF that systems for identifying birds from mono-directional recordings are now performing very well and several mobile applications implementing this are emerging today. However, there is also interest in identifying birds from omnidirectional or binaural recordings. This would enable more passive monitoring scenarios like networks of static recorders that continuously capture the surrounding sound environment. The advantage of this type of approach is that it introduces less sampling bias than the opportunistic observations of citizen scientists. However, recognizing birds in such content is much more difficult due to the high vocal activity with signal overlap (e.g. during the dawn chorus) and high levels of ambient noise.

Data collection

The training set used for the challenge will be a version of the 2019 training set enriched by new contributions from the Xeno-canto network and a geographic extension. It will contain approximately 80K recordings covering between 1500 and 2000 species from North, Central and South America, as well as Europe. This will be the largest bioacoustic dataset used in the literature. For the test set, three sources of soundscapes will be used: (i) 100+ hours of manually annotated soundscapes recorded using 30 field recorders between January and June 2017 in Ithaca, NY, USA by the Cornell Lab of Ornithology, (ii) 10+ hours of fully annotated dawn chorus soundscapes recorded using solar-powered field recorders between January and June 2018 near Frankfurt by OekoFor, (iii) 2 hours acquired at high sampling rate (250 kHz) by binaural antenna in Côte d'Azur, France.

Task description

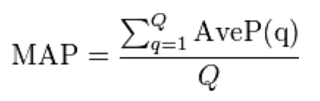

Two scenarios will be evaluated: (i) the recognition of all specimens singing in a long sequence (up to one hour) of raw soundscapes that can contain tens of birds singing simultaneously, and (ii) chorus source separation in complex soundscapes that were recorded in stereo at a very high sampling rate (250 kHz).

For the first scenario, participants will be asked to provide time intervals of recognized singing birds. Participants will be allowed to use any of the provided metadata complementary to the audio content (wav format, 44.1 kHz, 48 kHz, or 96 kHz sampling rate). The task is focused on developing real-world applicable solutions and therefore requires participants to submit single models trained only on the mono-species recordings provided as training data.

For the second scenario, on stereophonic recordings, the goal will be to determine the species singing in chorus simultaneously during a time interval. In contrast to the first scenario, participants are invited to run automatic source separation before, or jointly with, the bird species classification, taking advantage of the multi-channel recordings. Participants will be allowed to use data other than the provided recordings, but will have to provide the scripts needed to check that their solution is fully automatic.

For both scenarios, the evaluation measure will be the classification mean Average Precision (mAP), computed as:

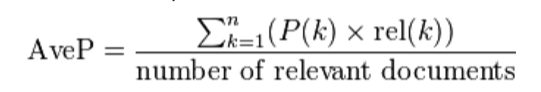

where Q is the number of test audio files and AveP(q) for a given test file q is computed as:

$$\mathrm{AveP}(q) = \frac{\sum_{k=1}^{n} P(k) \times \mathrm{rel}(k)}{\text{number of relevant species for } q}$$

where k is the rank in the sequence of returned species, n is the total number of returned species, P(k) is the precision at cut-off k in the list and rel(k) is an indicator function equaling 1 if the item at rank k is a relevant species (i.e. one of the species in the ground truth).
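The measure described above can be illustrated with a minimal Python sketch. It is not the official evaluation script. It assumes the standard definition of average precision, in which the sum of P(k) × rel(k) is divided by the number of ground-truth species for the file, and that each returned list contains no duplicate species. The function names, the file identifiers and the species labels are hypothetical.

```python
from typing import Dict, Sequence, Set


def average_precision(ranked: Sequence[str], relevant: Set[str]) -> float:
    """AveP(q): ranked list of returned species vs. ground-truth species set."""
    if not relevant:
        # Assumption: a file without ground-truth species contributes 0.
        return 0.0
    hits = 0
    precision_sum = 0.0
    for k, species in enumerate(ranked, start=1):
        if species in relevant:        # rel(k) = 1
            hits += 1
            precision_sum += hits / k  # P(k), precision at cut-off k
    # Assumption: normalise by the number of relevant species.
    return precision_sum / len(relevant)


def mean_average_precision(
    predictions: Dict[str, Sequence[str]],
    ground_truth: Dict[str, Set[str]],
) -> float:
    """mAP: mean of AveP(q) over the Q test files listed in ground_truth."""
    if not ground_truth:
        return 0.0
    total = sum(
        average_precision(predictions.get(q, []), relevant)
        for q, relevant in ground_truth.items()
    )
    return total / len(ground_truth)


# Hypothetical example with two test files.
predictions = {"file_a": ["s1", "s2", "s3"], "file_b": ["s4", "s5"]}
ground_truth = {"file_a": {"s1", "s3"}, "file_b": {"s5"}}
score = mean_average_precision(predictions, ground_truth)
```

In this example AveP is (1/1 + 2/3) / 2 = 5/6 for the first file and (1/2) / 1 = 1/2 for the second, so the mAP is 2/3. A file with no returned species scores 0 under this sketch.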

How to participate?

See registration instructions here. Fast link to the BirdCLEF challenge on AICrowd: BirdCLEF 2020

Reward

The winner of each of the four LifeCLEF 2020 challenges will be offered a cloud credit grant of 5k USD as part of Microsoft's AI for Earth program.

Results

The overview paper presenting the results of the challenge is available here (CEUR-WS proceedings).