- ImageCLEF 2025

- LifeCLEF 2025

- ImageCLEF 2024

- LifeCLEF 2024

- ImageCLEF 2023

- LifeCLEF 2023

- ImageCLEF 2022

- LifeCLEF2022

- ImageCLEF 2021

- LifeCLEF 2021

- ImageCLEF 2020

- LifeCLEF 2020

- ImageCLEF 2019

- LifeCLEF 2019

- ImageCLEF 2018

- LifeCLEF 2018

- ImageCLEF 2017

- LifeCLEF2017

- ImageCLEF 2016

- LifeCLEF 2016

- ImageCLEF 2015

- LifeCLEF 2015

- ImageCLEF 2014

- LifeCLEF 2014

- ImageCLEF 2013

- ImageCLEF 2012

- ImageCLEF 2011

- ImageCLEF 2010

- ImageCLEF 2009

- ImageCLEF 2008

- ImageCLEF 2007

- ImageCLEF 2006

- ImageCLEF 2005

- ImageCLEF 2004

- ImageCLEF 2003

- Publications

- Old resources

You are here

ImageCLEFmed Caption

Welcome to the 9th edition of the Caption Task!

Motivation

Interpreting and summarizing the insights gained from medical images such as radiology output is a time-consuming task that involves highly trained experts and often represents a bottleneck in clinical diagnosis pipelines.

Consequently, there is a considerable need for automatic methods that can approximate this mapping from visual information to condensed textual descriptions. The more image characteristics are known, the more structured are the radiology scans and hence, the more efficient are the radiologists regarding interpretation. We work on the basis of a large-scale collection of figures from open access biomedical journal articles (PubMed Central). All images in the training data are accompanied by UMLS concepts extracted from the original image caption.

Lessons learned:

- In the first and second editions of this task, held at ImageCLEF 2017 and ImageCLEF 2018, participants noted a broad variety of content and situation among training images. In 2019, the training data was reduced solely to radiology images, with ImageCLEF 2020 adding additional imaging modality information, for pre-processing purposes and multi-modal approaches.

- The focus in ImageCLEF 2021 lay in using real radiology images annotated by medical doctors. This step aimed at increasing the medical context relevance of the UMLS concepts, but more images of such high quality are difficult to acquire.

- As uncertainty regarding additional source was noted, we will clearly separate systems using exclusively the official training data from those that incorporate additional sources of evidence

- For ImageCLEF 2022, an extended version of the ImageCLEF 2020 dataset was used. For the caption prediction subtask, a number of different additional evaluations metrics were introduced with the goal of replacing the primary evaluation metric in future iterations of the task.

- For ImageCLEF 2023, several issues with the dataset (large number of concepts, lemmatization errors, duplicate captions) were tackled and based on experiments in the previous year, BERTScore was used as the primary evaluation metric for the caption prediction subtask.

News

- 24.10.2024: website goes live

- 15.12.2024: registration opens

- 01.03.2025: development dataset released

Preliminary Schedule

- 24.10.2024: website goes live

- 15.12.2024: registration opens

- 01.03.2025: development dataset released

- 15.04.2025: test dataset released

- 13.05.2025: run submission phase ended

- 17.05.2025: results published

- 31.05.2025: submission of participant papers [CEUR-WS]

- 21.06.2025: notification of acceptance

Task Description

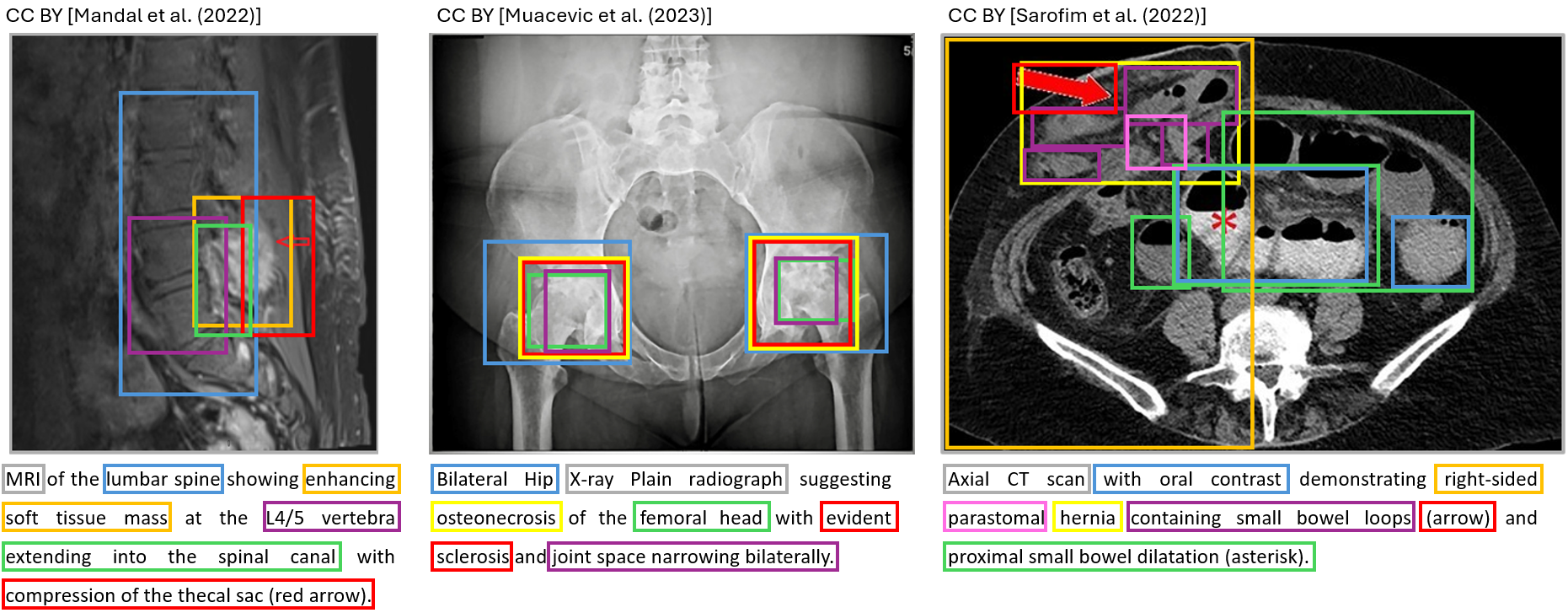

For captioning, participants will be requested to develop solutions for automatically identifying individual components from which captions are composed in Radiology Objects in COntext version 2[2] images.

ImageCLEFmedical Caption 2025 consists of two substaks:

- Concept Detection Task

- Caption Prediction Task

Concept Detection Task

The first step to automatic image captioning and scene understanding is identifying the presence and location of relevant concepts in a large corpus of medical images. Based on the visual image content, this subtask provides the building blocks for the scene understanding step by identifying the individual components from which captions are composed. The concepts can be further applied for context-based image and information retrieval purposes.

Evaluation is conducted in terms of set coverage metrics such as precision, recall, and combinations thereof.

Caption Prediction Task

On the basis of the concept vocabulary detected in the first subtask as well as the visual information of their interaction in the image, participating systems are tasked with composing coherent captions for the entirety of an image. In this step, rather than the mere coverage of visual concepts, detecting the interplay of visible elements is crucial for strong performance.

This year, we will use BERTScore as the primary evaluation metric and ROUGE as the secondary evaluation metric for the caption prediction subtask. Other metrics such as MedBERTScore, MedBLEURT, and BLEU will also be published.

Explainability Task

In addition, we ask participants to provide explanations for the captions of a small subset (will be released with the test dataset) of images. We encourage people to be creative. There are no technical limitations to this task. The explanations will be manually evaluated by a radiologist for interpretability, relevance, and creativity. Examples of how such an explanation might look like are provided as follows:

Data

The data for the caption task will contain curated images from the medical literature including their captions and associated UMLS terms that are manually controlled as metadata. A more diverse data set will be made available to foster more complex approaches.

For questions regarding the dataset please use the challenge website forum or contact hendrik.damm@fh-dortmund.de.

For the development dataset, Radiology Objects in COntext Version 2 (ROCOv2) [2], an updated and extended version of the Radiology Objects in COntext (ROCO) dataset [1], is used for both subtasks. As in previous editions, the dataset originates from biomedical articles of the PMC OpenAccess subset, with the test set comprising a previously unseen set of images.

Training Set: Consists of 80091 radiology images

Validation Set: Consists of 17277 radiology images

Test Set: Consists of 19267 radiology images

Concept Detection Task

The concepts were generated using a reduced subset of the UMLS 2022 AB release. To improve the feasibility of recognizing concepts from the images, concepts were filtered based on their semantic type. Concepts with low frequency were also removed, based on suggestions from previous years.

Caption Prediction Task

For this task each caption is pre-processed in the following way:

- removal of links from the captions

Evaluation methodology

The source code of the evaluation script is available on Github (https://github.com/taubsity/clef-caption-evaluation).

For questions regarding the evaluation scripts please use the challenge website forum or contact tabea.pakull@uk-essen.de.

Concept Detection

Evaluation is conducted in terms of F1 scores between system predicted and ground truth concepts, using the following methodology and parameters:

- The default implementation of the Python scikit-learn (v0.17.1-2) F1 scoring method is used. It is documented

here. - A Python (3.x) script loads the candidate run file, as well as the ground truth (GT) file, and processes each candidate-GT concept sets.

- For each candidate-GT concept set, the y_pred and y_true arrays are generated. They are binary arrays indicating for each concept contained in both candidate and GT set if it is present (1) or not (0).

- The F1 score is then calculated. The default 'binary' averaging method is used.

- All F1 scores are summed and averaged over the number of elements in the test set, giving the final score.

- The primary score considers any concept. The secondary score filters both predicted and GT concepts to the set of manually annotated concepts before repeating the same F1 scoring steps.

The ground truth for the test set was generated based on the same reduced subset of the UMLS 2022 AB release which was used for the training data (see above for more details).

Caption Prediction

This year, ranking of participants is based on an average score over all used metrics. In total 6 metrics are computed that fall into the aspects of relevance and factuality.

Relevance

In order to evaluate the relevance aspect of generated captions the following metrics are used:

- Image and Caption Similarity

- BERT-Score (Recall) with inverse document frequency (idf) scores computed from the test corpus for importance weighting

- Recall-Oriented Understudy for Gisting Evaluation (ROUGE) for overlap of unigrams (ROUGE-1) (F-measure)

- Bilingual Evaluation Understudy with Representations from Transformers (BLEURT)

Image and Caption Similarity is computed using the following methodology:

Using a medical imaging embedding model for calculating embeddings of the caption and the image and calculating similarity of these embeddings.

Note: For the following relevance metrics (BERT-Score, ROUGE and BLEURT), each caption is pre-processed in the same way:

- The caption is converted to lower-case.

- Replace numbers with the token 'number'.

- Remove punctuation.

Note that the captions are always considered as a single sentence, even if it actually contains several sentences.

BERTScore is calculated using the following methodology and parameters:

The native Python implementation of BERTScore is used. This scoring method is based on the paper

"BERTScore: Evaluating Text Generation with BERT" and aims to measure the quality of generated text by comparing it to a reference. We use Recall BERTScore with inverse document frequency (idf) scores computed from the test corpus for importance weighting as this setting correlates the most with human ratings for the image captioning task reported in the BERTScore paper.

To calculate BERTScore, we use the microsoft/deberta-xlarge-mnli model, which can be found on the Hugging Face Model Hub. The model is pretrained on a large corpus of text and fine-tuned for natural language inference tasks. It can be used to compute contextualized word embeddings, which are essential for BERTScore calculation.

To compute the final BERTScore, we first calculate the individual score (Recall idf) for each caption. The BERTScore is then averaged across all captions to give the final score.

The ROUGE score is calculated using the following methodology and parameters:

The native python implementation of ROUGE scoring method is used. It is designed to replicate results from the original perl package that was introduced in the paper

"ROUGE: A Package for Automatic Evaluation of Summaries".

Specifically, we calculate the ROUGE-1 (F-measure) score, which measures the number of matching unigrams between the model-generated text and a reference. The final score is the average ROUGE-1 over all captions.

For calculation of BLEURT the following methodology and parameters are used:

The native Python implementation of BLEURT is used. This scoring method is based on the paper

"BLEURT: Learning Robust Metrics for Text Generation". The aim of BLEURT is to provide an evaluation metric for text generation by learning from human judgments using BERT-based representations. In this evaluation, the recommended BLEURT-20 checkpoint is employed.

Factuality

In order to evaluate the factuality aspect of generated captions the following metrics are used:

- Unified Medical Language System (UMLS) Concept F1

- AlignScore

We calculate the UMLS F1 using the following methodology:

We use MedCAT to get the medical entities (UMLS concepts) of the caption and the predicted caption. We only match entities with the semantic types that are used to calculate MEDCON as described in the paper

"Aci-bench: a Novel Ambient Clinical Intelligence Dataset for Benchmarking Automatic Visit Note Generation".

For calculating MEDCON the following methodology and parameters are used:

The native Python implementation of MEDCON is used. As described in the paper

"Aci-bench: a Novel Ambient Clinical Intelligence Dataset for Benchmarking Automatic Visit Note Generation" it evaluates the clinical accuracy and consistency of the Unified Medical Language System (UMLS) concept sets in generated and reference texts.

For detecting the UMLS concepts in both texts the QuickUMLS package is used and then the F1 score is calculated.

The AlignScore is calculated using the following methodology and parameters:

The native Python implementation of the AlignScore is used. It implements the metric based on RoBERTa introduced in the paper

"AlignScore: Evaluating Factual Consistency with A Unified Alignment Function". The

checkpoints are available on the Huggingface Model Hub. The AlignScore is designed to evaluate factual consistency in text generation by assessing the alignment of information between two pieces of text. The model calculates a score by splitting long contexts into manageable chunks and matching each claim sentence with the most supportive context chunk. The final AlignScore is the average alignment score across all claim sentences.

Participant registration

Please refer to the general ImageCLEF registration instructions

Results

TBA

CEUR Working Notes

TBA

Citations

TBA

Contact

Organizers:

- Hendrik Damm <hendrik.damm(at)fh-dortmund.de>, University of Applied Sciences and Arts Dortmund, Germany

- Johannes Rückert, University of Applied Sciences and Arts Dortmund, Germany

- Asma Ben Abacha <abenabacha(at)microsoft.com>, Microsoft, USA

- Alba García Seco de Herrera <alba.garcia(at)essex.ac.uk>,University of Essex, UK

- Christoph M. Friedrich <christoph.friedrich(at)fh-dortmund.de>, University of Applied Sciences and Arts Dortmund, Germany

- Henning Müller <henning.mueller(at)hevs.ch>, University of Applied Sciences Western Switzerland, Sierre, Switzerland

- Louise Bloch <louise.bloch(at)fh-dortmund.de>, University of Applied Sciences and Arts Dortmund, Germany

- Raphael Brüngel <raphael.bruengel(at)fh-dortmund.de>, University of Applied Sciences and Arts Dortmund, Germany

- Ahmad Idrissi-Yaghir <ahmad.idrissi-yaghir(a)fh-dortmund.de>, University of Applied Sciences and Arts Dortmund, Germany

- Henning Schäfer <henning.schaefer(at)uk-essen.de>, Institute for Transfusion Medicine, University Hospital Essen, Germany

- Cynthia S. Schmidt, Institute for Artificial Intelligence in Medicine (IKIM), University Hospital Essen

- Tabea M. G. Pakull, Institute for Transfusion Medicine, University Hospital Essen, Germany

- Benjamin Bracke, University of Applied Sciences and Arts Dortmund, Germany

- Obioma Pelka, Institute for Artificial Intelligence in Medicine, Germany

- Bahadir, Eryilmaz, Institute for Artificial Intelligence in Medicine, Germany

- Helmut Becker, Institute for Artificial Intelligence in Medicine, Germany

Acknowledgments

[1] Pelka, O., Koitka, S., Rückert, J., Nensa, F., & Friedrich, C. M. (2018). Radiology Objects in COntext (ROCO): A Multimodal Image Dataset. In Intravascular Imaging and Computer Assisted Stenting and Large-Scale Annotation of Biomedical Data and Expert Label Synthesis (pp. 180–189). Springer International Publishing.

[2] Rückert, J., Bloch, L., Brüngel, R., Idrissi-Yaghir, A., Schäfer, H., Schmidt, C. S., Koitka, S., Pelka, O., Abacha, A. B., de Herrera, A. G. S., Müller, H., Horn, P. A., Nensa, F., & Friedrich, C. M. (2024). ROCOv2: Radiology objects in COntext version 2, an updated multimodal image dataset. https://doi.org/10.48550/ARXIV.2405.10004