- ImageCLEF 2025

- LifeCLEF 2025

- ImageCLEF 2024

- LifeCLEF 2024

- ImageCLEF 2023

- LifeCLEF 2023

- ImageCLEF 2022

- LifeCLEF2022

- ImageCLEF 2021

- LifeCLEF 2021

- ImageCLEF 2020

- LifeCLEF 2020

- ImageCLEF 2019

- LifeCLEF 2019

- ImageCLEF 2018

- LifeCLEF 2018

- ImageCLEF 2017

- LifeCLEF2017

- ImageCLEF 2016

- LifeCLEF 2016

- ImageCLEF 2015

- LifeCLEF 2015

- ImageCLEF 2014

- LifeCLEF 2014

- ImageCLEF 2013

- ImageCLEF 2012

- ImageCLEF 2011

- ImageCLEF 2010

- ImageCLEF 2009

- ImageCLEF 2008

- ImageCLEF 2007

- ImageCLEF 2006

- ImageCLEF 2005

- ImageCLEF 2004

- ImageCLEF 2003

- Publications

- Old resources

You are here

Robot Vision 2013

Welcome to the website of the 5th edition of the Robot Vision Challenge!

The fifth edition of the Robot Vision challenge follows four previous successful events. As for the previous editions, the challenge will address the problem of semantic place classification using visual and depth information. This time, the task also addresses the challenge of object recognition.

Mobile robot platform

used for data acquisition.

News

- 10/12/2012 - The task has been released.

- 28/01/2013 - Training information is now available => Download here

- 09/04/2013 - Test sequence is now available => Download here

- 15/04/2013 - Submission system is now open => Registration system

- 17/04/2013 - A validation is now available => Download here

- 01/05/2013 - Submission deadline has been extended 1 week => New deadline: May 8th

- 31/05/2013 - Results release: The results for the task has been released. The winner is the MIAR ICT group.

You can follow Robot Vision task on Facebook

Organisers

- Barbara Caputo, Research Institute, Martigny, Switzerland, bcaputo@idiap.ch

- Jesus Martinez Gomez, University of Castilla-La Mancha, Albacete, Spain, jesus.martinez@uclm.es

- Ismael Garcia Varea, University of Castilla-La Mancha, Albacete, Spain, Ismael.Garcia@uclm.es

- Miguel Cazorla, University of Alicante, Alicante, Spain, miguel@dccia.ua.es

Contact

For any doubt related to the task, please refer to Jesus Martinez Gomez using his email: jesus.martinez@uclm.es

Overview

The fifth edition of the RobotVision challenge will focus on the problem of multi-modal place classification and object recognition. Participants will be asked to classify functional areas on the basis of image sequences, captured by a perspective camera and a kinect mounted on a mobile robot within an office environment. Therefore, participants will have available visual (RGB) images and depth images generated from 3D cloud points. Participants will also be asked to list of objects that appear in the scene.

Training sequences will be labelled with semantic labels (corridor, kitchen, office) but also with the objects that are represented in them (fridge, chair, computer). The test sequence will be acquired within the same building and floor but there can be variations in the lighting conditions (very bright places or very dark ones) or the acquisition procedure (clockwise and counter clockwise). Taking that into account we highly encourage to the participants to make use of the depth information in order to extract relevant information in extreme lighting conditions. As a novelty for this year, the provided training sequences of images will be labelled with a set of objects (previously defined) that appears within these images. Therefore proper recognition of objects will produce higher score in the evaluation procedure.

Schedule

- 10/12/2012 - Release of the task.

- 28/01/2013 - Training data and task release.

- 09/04/2013 - Test data release.

- 15/04/2013 - Submission system open.

- 17/04/2013 - Validation data release.

- 08/05/2013 - Run Submission Deadline

- 31/05/2013 - Results Release

- 15/06/2013 - Working Notes Papers Submitted --> Instructions http://www.clef2013.org/index.php?page=Pages/instructions_for_authors.html

- 23-26/09/2013 - CLEF 2013 conference in Valencia (Spain)

Results

| RobotVision Challenge |

| Groups | |||||||||||||||||||||||||||||||||||

|

| Runs | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

The task

In this year edition only one task will be considered, where participants should be able to answer two questions:

- The first one is the typical question for semantic place classification, that is "where are you?" when presented with a test sequence imaging a room category seen during training.

- The second question is, “what objects are you seeing in that place?”. The set of recognizable objects are predefined according to the typical objects that can appear in the different imaged places in the provided sequences.

The data

The main novelty of this edition will be the information of the presence or lack of a set of predefined objects in the images. There are several sequences of visual and depth images. Visual images are stored using the .png format while depth images use the .pcd one (distance information + colour).

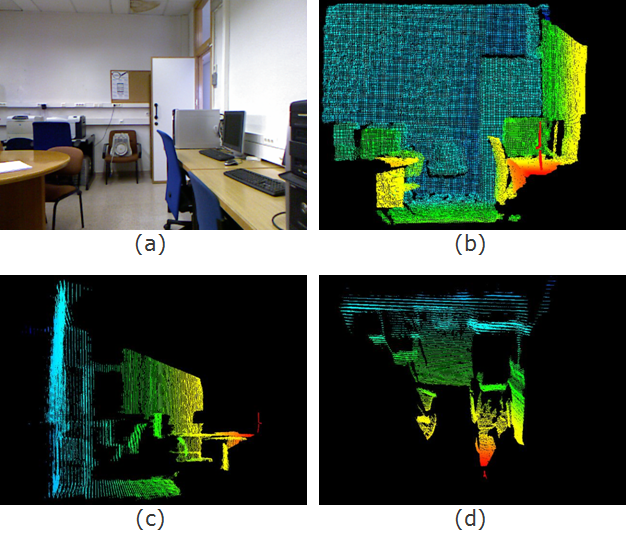

The following image shows an example of a visual and a depth image from the same scene.

Visual image (a) and front (b), left (c) and top (d) views from the .pcd image

Sequences

Two training sequences are provided at the task release. An additional (labelled) validation sequence will be provided in next weeks to allow participants to evaluate their proposals with a sequence similar to the test one. Finally, the unlabelled test sequence will provided.

- Training1

- Visual Images

- Depth Images: ASCII or Binary

- Locations and Objects

- Training2

- Visual Images

- Depth Images: ASCII or Binary

- Locations and Objects

- Validation

- Visual Images

- Depth Images: ASCII or Binary

- Locations and Objects

- Test

- Visual Images

- Depth Images ASCII or Binary

Rooms

These are all the rooms/categories that appear in the database:

- Corridor

- Hall

- ProfessorOffice

- StudentOffice

- TechnicalRoom

- Toilet

- Secretary

- VisioConferene

- Warehouse

- ElevatorArea

Sample images for all the room categories listed in the dataset

Objects

These are all the objects that can appear in any image of the database:

- Extinguisher

- Computer

- Chair

- Printer

- Urinal

- Screen

- Trash

- Fridge

Sample images for all the objects listed in the dataset

Performance Evaluation

For each frame in the test sequence, participants have to provide information related to the class/room category (1 multiclass problem) but also related to the presence/lack of all the objects listed in the dataset (8 binary problems). The number of times a specific object appears in a frame it is not relevant. The final score for a run will be the sum of all the scores obtained for the frames included in the test sequence.

The following rules are used when calculating the final score for a frame:

Class/Room Category

- The class/room category has been correctly classified: +1.0 points

- The class/room category has been wrongly classified: -0.5 points

- The class/room category has not benn classified: 0.0 points

Object

- For each correctly classified object whitin the frame: +0.125 points

- For each misclassified object whitin the frame: -0.125 points

- For each object that was not classified: 0.0 points

Real values for the frame (TechnicalRoom !Extinguisher Computer !Chair Printer !Urinal !Screen !Trash !Fridge)

| Class / Room Category | Extinguisher | Computer | Chair | Printer | Urinal | Screen | Trash | Fridge |

| TechnicalRoom | NO | YES | NO | YES | NO | NO | NO | NO |

| Class / Room Category | Extinguisher | Computer | Chair | Printer | Urinal | Screen | Trash | Fridge |

| TechnicalRoom | YES | NO | NO | NO | YES | |||

| 1.0 | 0.0 | 0.125 | 0.125 | -0.125 | 0.125 | 0.0 | -0.125 | 0.0 |

| Class / Room Category | Extinguisher | Computer | Chair | Printer | Urinal | Screen | Trash | Fridge |

| Unknown | NO | YES | NO | YES | NO | NO | NO | NO |

| 0.0 | 0.125 | 0.125 | 0.125 | 0.125 | 0.125 | 0.125 | 0.125 | 0.125 |

| Class / Room Category | Extinguisher | Computer | Chair | Printer | Urinal | Screen | Trash | Fridge |

| Corridor | YES | NO | NO | YES | NO | NO | NO | |

| -0.5 | -0.125 | -0.125 | 0.125 | 0.125 | 0.125 | 0.0 | 0.125 | 0.125 |

Performance Evaluation Script

A python script is provided for evaluating performance of the algorithms on the test/validation sequence. The script and some examples are available: Python (tested in v 3.3.0 ) is required in order to use the module or execute the script. Python is available for Unix/Linux, Windows, and Mac OSX and can be downloaded from http://www.python.org/download/. The knowledge of Python is not required in order to simply run the script; however, basic knowledge might be useful since it can also be integrated with other scripts as a module. A good quick guide to Python can be found at http://rgruet.free.fr/PQR26/PQR2.6.html. The archive contains four files:

- robotvision.py - the main Python script

- example.py - small example illustrating how to use robotvision.py as a module

- training1perfect.results - example of a file containing perfect results for the training 1 sequence

- example2perfect.results - example of a file containing perfect results for the training 2 sequence

- exampletrain1.results - example of a file containing fake results for the training 1 sequence. It should obtain the score 3048.875

- Corridor

- Hall

- ProfessorOffice

- StudentOffice

- TechnicalRoom

- Toilet

- Secretary

- VisioConferene

- Warehouse

- ElevatorArea

- Unknown - no result provided for the room category

- Extinguisher

- Computer

- Chair

- Printer

- Urinal

- Screen

- Trash

- Fridge

- Empty string- no result provided for the object

/========================================================\ | robotvision.py | |--------------------------------------------------------| | RobotVision@ImageCLEF'13 Performance Evaluation Script | | Author: Jesus Martinez-Gomez, Ismael Garcia-Varea | \========================================================/ Error: Incorrect command line arguments. Usage: robotvision.pyIn Linux, it is sufficient to make the robotvision.py executable (chmod +x ./robotvision.py) and then type ./robotvision.py in the console. In Windows, the .py extension is usually assigned to the Python interpreter and typing robotvision.py in the console (cmd) is sufficient to produce the note presented above. In order to obtain the final score for a given training sequence, run the script with the parameters described above e.g. as follows: robotvision.py exampletrain1.results training1Arguments: - Path to the results file. Each line in the file represents a classification result for a single image and should be formatted as follows: list of or <!object_i> - ID of the test sequence: 'training1' or'training2'

The command will produce the score for the results taken from the exampletrain1.results file obtained for the training1 sequence. The outcome should be as follows:

Selected Arguments:Each line in the results file should represent a classification result for a single image. Since each image can be uniquely identified by its frame number, each line should be formatted as follows: <frame_number> <area_label> list of <object_i> or < !object_i> As indicated above, <area_label> can be set to "Unknown" and the image will not contribute to the final score (+0.0 points). In a similar way, any presece/lack of an object can be avoided and the objecy will not contribute to the final score (+0.0 points).= exampletrain1.results = training1 Calculating the score... Done! =================== Final score: 3048.875 ===================

Useful information for participants

The organizers propose the use of several techniques for features extraction and cue integration. Thanks to these well documented techniques with open source available, participants can focus on the development of features while using the proposed method for cue integration or vice versa. In addition to feature extraction and integration, the organizers also provide useful information as the point cloud library.

Features generation

Visual images:

- Pyramid Histogram of Oriented Gradients (PHOG)

- Web page: Phog Page

- Article to refer: A. Bosch, A. Zisserman, and X. Munoz, “Representing shape with a spatial pyramid kernel,” in Proceedings of the 6th ACM international conference on Image and video retrieval. ACM, 2007, p. 408.

- Source code for download (Matlab): Phog Code

- Pyramid Histogram Of visual Words (PHOW)

- Article to refer: A. Bosch, A. Zisserman, and X. Munoz, “Representing shape with a spatial pyramid kernel,” in Proceedings of the 6th ACM international conference on Image and video retrieval. ACM, 2007, p. 408.

- Download ( VLFeat open source library): VLFeat Install Instructions

- Normal Aligned Radial Feature (NARF)

- Article to refer: Steder, B.; Rusu, R.B.; Konolige, K.; Burgard, W.; , "Point feature extraction on 3D range scans taking into account object boundaries," Robotics and Automation (ICRA), 2011 IEEE International Conference on , vol., no., pp.2601-2608, 9-13 May 2011

- Source code for download (C++): How to extract NARF Features from a range image

Cue integration

Learning the classifier

- Online-Batch Strongly Convex mUlti keRnel lEarning: OBSCURE

- Article to refer: Orabona, F.; Luo Jie; Caputo, B.; , "Online-batch strongly convex Multi Kernel Learning," Computer Vision and Pattern Recognition (CVPR), 2010 IEEE Conference on , vol., no., pp.787-794, 13-18 June 2010

- Source code for download (Matlab): Dogma

- Waikato Environment for Knowledge Analysis: Weka

- Article to refer: Mark Hall, Eibe Frank, Geoffrey Holmes, Bernhard Pfahringer, Peter Reutemann, Ian H. Witten (2009); The WEKA Data Mining Software: An Update; SIGKDD Explorations, Volume 11, Issue 1.

- Download (Java): Weka Download

3D point cloud processing

Framework with numerous state-of-the art algorithms including filtering, feature estimation, surface reconstruction, registration, model fitting and segmentation.

- The Point Cloud Library: PCL

- Documentation

- Article to refer: Rusu, R.B., Cousins, S.: 3D is here: Point cloud library (PCL). In: Proc. of the Int. Conf. on Robotics and Automation (ICRA). Shanghai, China (2011)

- Source code for download: http://pointclouds.org/downloads/

- Contact: http://pointclouds.org/contact.html

| Attachment | Size |

|---|---|

| 448.07 KB | |

| 3.17 MB | |

| 1017.82 KB | |

| 39.93 KB | |

| 209.5 KB |