ImageCLEFdrawnUI

Motivation

Building websites requires a very specific set of skills. Currently, the two main ways to achieve this are using a visual website builder or programming. Both approaches have a steep learning curve. Enabling people to create websites by drawing them on a whiteboard or on a piece of paper would make the webpage building process more accessible.

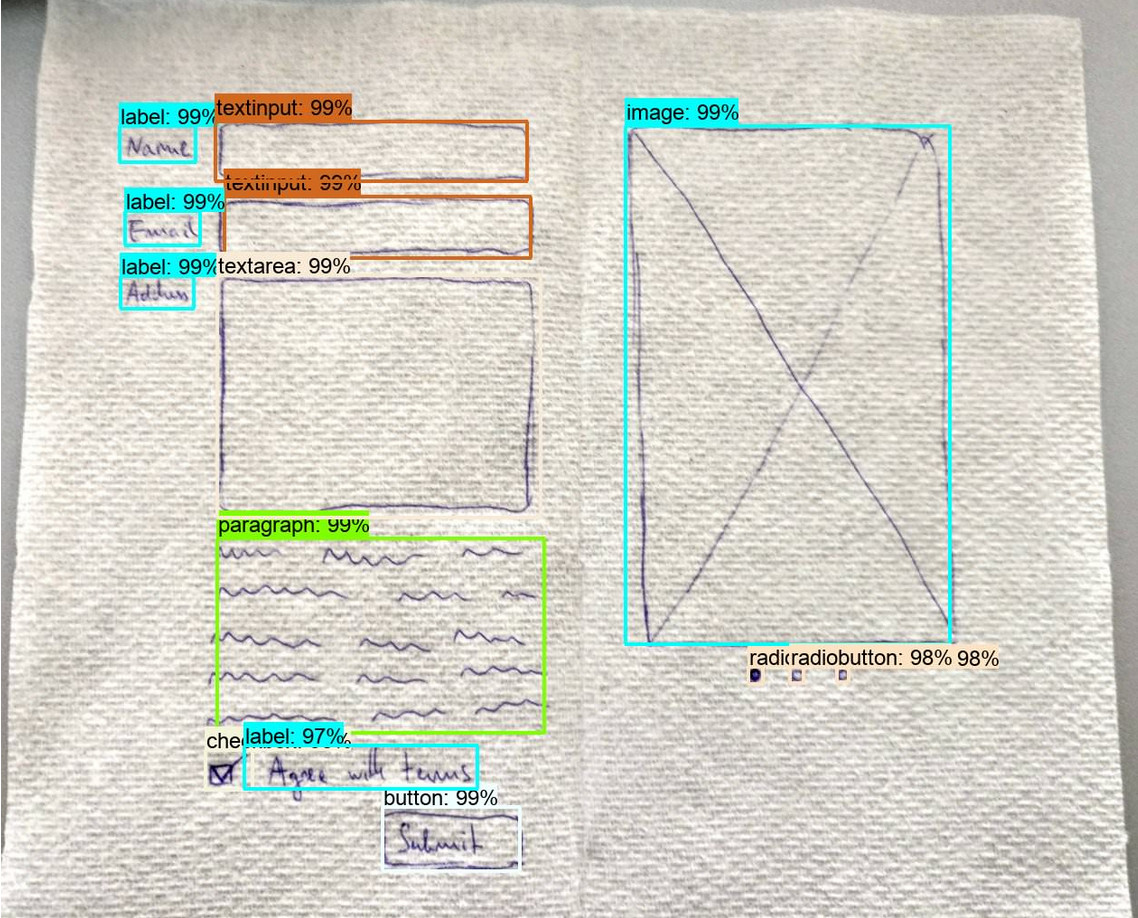

A first step in capturing the intent expressed by a user through a wireframe is to correctly detect a set of atomic user interface (UI) elements in their drawings. The bounding boxes and labels resulting from this detection step can then be used to accurately generate a website layout using various heuristics.

In this context, the task on detection and recognition of hand drawn website UIs addresses the problem of automatically recognizing the hand drawn objects that represent website UI elements, which are then translated automatically into website code.

News

- 30.10.2019: Website goes live

Preliminary Schedule

- 20.12.2019: registration opens for all ImageCLEF tasks

- 13.01.2020: development data release starts

- 16.03.2020: test data release starts

- 05.06.2020 (previously 11.05.2020): deadline for submitting the participants' runs

- 12.06.2020 (previously 18.05.2020): release of the processed results by the task organizers

- 10.07.2020 (previously 25.05.2020): deadline for submission of working notes papers by the participants

- 07.08.2020 (previously 15.06.2020): notification of acceptance of the working notes papers

- 21.08.2020 (previously 29.06.2020): camera ready working notes papers

- 22-25.09.2020: CLEF 2020, Thessaloniki, Greece

Task description

Given a set of images of hand drawn UIs, participants are required to develop machine learning techniques that are able to predict the exact position and type of UI elements.

Data

The provided data set consists of 3,000 hand drawn images inspired by mobile application screenshots and actual web pages, containing 1,000 different templates. Each image comes with manual labels giving the position of the bounding box of each UI element and its type. To avoid any ambiguity, a predefined shape dictionary with 21 classes is used, e.g., paragraph, label, header. The development set contains 2,000 images while the test set contains 1,000 images.

Evaluation methodology

The performance of the algorithms will be evaluated using the standard mean Average Precision (mAP) at an IoU threshold of 0.5, commonly used in object detection.
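As an illustration of the IoU criterion behind this metric, the sketch below computes the intersection over union of two boxes and checks a prediction against a ground-truth box. It is not the organizers' evaluation script: the `(xmin, ymin, xmax, ymax)` box layout, the function names, and the use of `>=` at the threshold are assumptions made for this example.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (xmin, ymin, xmax, ymax).

    Assumption: coordinates are treated as continuous, so a box's width is
    xmax - xmin (no +1 pixel correction).
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlapping region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def is_match(pred_box, pred_label, gt_box, gt_label, threshold=0.5):
    """A prediction matches a ground-truth box if the labels agree and the
    IoU reaches the threshold (assumed inclusive here)."""
    return pred_label == gt_label and iou(pred_box, gt_box) >= threshold
```

Average Precision for a class is then obtained by ranking that class's predictions by confidence and accumulating matches; mAP averages this over the classes.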

Participant registration

Please refer to the general ImageCLEF registration instructions

Submission instructions

The submissions will be received through the crowdAI system.

Participants will be permitted to submit up to 10 runs. External training data is not allowed.

Each system run will consist of a single ASCII plain text file. The results of each test set should be given in separate lines in the text file. The format of the text file is as follows:

- [image_ID/document_ID] [results]

The results for each test set image should be given on a separate line, with at most 300 localised objects per line. Separator characters delimit the elements: a semicolon ';' between classes, a colon ':' after the confidence, a comma ',' between multiple bounding boxes of the same class, and 'x' and '+' within the size-offset bounding box format, i.e.:

[image_ID];[class1] [[confidence1,1]:][width1,1]x[height1,1]+[xmin1,1]+[ymin1,1],[[confidence1,2]:][width1,2]x[height1,2]+[xmin1,2]+[ymin1,2],...;[class2] ...

[confidence] values are floating point numbers between 0 and 1; a higher value means a higher score.

The minimum values [xmin] and [ymin] are measured from the top left corner of the image.
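To check a run file before submitting, a line in this format can be parsed and validated with a short script. The following is a minimal sketch, not an official validator: the function name `parse_run_line`, the tuple layout it returns, and the reading of the square brackets around the confidence as "optional" are assumptions, and the sample line in the usage note is made up.

```python
import re

# One bounding box token: optional "confidence:" prefix, then WxH+xmin+ymin.
BOX_RE = re.compile(
    r"^(?:(?P<conf>[0-9]*\.?[0-9]+):)?"
    r"(?P<w>\d+)x(?P<h>\d+)\+(?P<x>\d+)\+(?P<y>\d+)$"
)


def parse_run_line(line, max_objects=300):
    """Parse one run-file line into (image_id, detections).

    Each detection is (label, confidence_or_None, width, height, xmin, ymin).
    Raises ValueError on malformed tokens, out-of-range confidences, or
    more than max_objects boxes on the line.
    """
    image_id, *groups = line.strip().split(";")
    detections = []
    for group in groups:
        # "label box1,box2,...": the class name precedes the first space.
        label, _, boxes = group.strip().partition(" ")
        for token in boxes.split(","):
            match = BOX_RE.match(token.strip())
            if match is None:
                raise ValueError(f"malformed bounding box: {token!r}")
            conf = float(match["conf"]) if match["conf"] is not None else None
            if conf is not None and not 0.0 <= conf <= 1.0:
                raise ValueError(f"confidence out of range: {conf}")
            detections.append(
                (label, conf, int(match["w"]), int(match["h"]),
                 int(match["x"]), int(match["y"]))
            )
    if len(detections) > max_objects:
        raise ValueError(f"more than {max_objects} objects on one line")
    return image_id, detections
```

For instance, `parse_run_line("img_1;button 0.9:10x20+1+2;image 0.5:3x4+5+6")` yields the image ID `"img_1"` and two detections, one per class.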

For example, in the development set format (notice that there are 2 bounding boxes for the class image):

- 2018_0714_112604_057 0 paragraphe 1 891 540 1757 1143

- 2018_0714_112604_057 3 image 1 2724 1368 2825 1507

- 2018_0714_112604_057 4 image 1 2622 1576 2777 1731

In the submission format, it would be a line as:

- 2018_0714_112604_057;paragraphe 0.6:867x604+891+540;image 0.7:102x140+2724+1368,0.3:156x156+2622+1576
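The conversion from development set rows to a submission line can be sketched as follows. This is an illustrative helper, not part of the official tooling. It assumes that the development columns are image ID, annotation index, class, confidence, xmin, ymin, xmax, ymax, and that width and height are computed inclusively (xmax - xmin + 1), which is inferred from the example widths (1757 - 891 + 1 = 867). The offsets written after the size are xmin and ymin, as the format definition specifies. The function name `dev_rows_to_run_line` is hypothetical.

```python
def dev_rows_to_run_line(rows):
    """Convert development-format rows for ONE image into a submission line.

    Assumed row layout (whitespace separated):
        image_id index class confidence xmin ymin xmax ymax
    """
    image_ids = set()
    boxes_by_class = {}  # dicts keep insertion order, so classes stay in input order

    for row in rows:
        image_id, _index, label, conf, xmin, ymin, xmax, ymax = row.split()
        image_ids.add(image_id)
        xmin, ymin, xmax, ymax = map(int, (xmin, ymin, xmax, ymax))

        # Assumption: inclusive pixel extents, as suggested by the example.
        width = xmax - xmin + 1
        height = ymax - ymin + 1

        token = f"{float(conf):g}:{width}x{height}+{xmin}+{ymin}"
        boxes_by_class.setdefault(label, []).append(token)

    if len(image_ids) != 1:
        raise ValueError("all rows must belong to the same image")
    (image_id,) = image_ids

    groups = [f"{label} {','.join(boxes)}" for label, boxes in boxes_by_class.items()]
    return ";".join([image_id] + groups)
```

Applied to the three development rows above, this groups the two `image` boxes under a single class entry, separated by a comma, and carries over the annotated confidence of 1 where a real run would write the model's own scores.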

Results

| run id | participant_name | clef_method_description | overall_precision | mAP @ IoU 0.5 | recall @ IoU 0.5 |
|---|---|---|---|---|---|
| 67816 | zip | Reset50, Faster RCNN, fullsize, grayscale | 0.970863755 | 58.28 | 44.5 |
| 68014 | zip | Inception, Resnet v2, faster RCNN, fullsize | 0.956427689 | 69.38 | 51.9 |
| 68003 | zip | Inception Resnet v2, faster RCNN full size grayscale | 0.956130067 | 69.45 | 52 |
| 67814 | zip | Resnet-50, faster RCNN, 12MP grayscale | 0.955690594 | 67.58 | 51.7 |
| 67972 | CudaMemError1 | fusiont-3 | 0.950449341 | 71.53 | 55.6 |
| 67833 | CudaMemError1 | obj wise 2 | 0.950352379 | 68.16 | 53.3 |
| 67710 | CudaMemError1 | resnest101 | 0.949090015 | 64.92 | 50.5 |
| 67413 | dimitri.fichou | baseline: Faster RCNN + data augmentation | 0.947899979 | 57.2 | 40.3 |
| 67991 | zip | Resnet50, faster RCNN, grayscale, fullsize | 0.944426347 | 64.7 | 47.2 |
| 68015 | zip | Inception_Resnet_v2 faster RCNN full sizes, merging confidence 0.45 - best F1 score | 0.941584217 | 75.51 | 55.5 |
| 67391 | OG_SouL | Pre-trained Mask-RCNN on COCO dataset | 0.940476191 | 57.34 | 41.7 |
| 67733 | zip | | 0.939230862 | 68.75 | 53.6 |
| 67722 | CudaMemError1 | resnest101 | 0.934630121 | 72.33 | 58.5 |
| 67706 | CudaMemError1 | - | 0.934373381 | 79.36 | 59.8 |
| 67829 | CudaMemError1 | obj fusion | 0.932995761 | 73.82 | 55.6 |
| 67707 | CudaMemError1 | - | 0.931251081 | 79.24 | 59.4 |
| 67831 | CudaMemError1 | image wise fusion | 0.929878746 | 79.11 | 60 |
| 67699 | OG_SouL | Multi-Pass inference Mask-RCNN model on black and white images | 0.918129682 | 63.73 | 50.1 |
| 67712 | OG_SouL | Multi-Pass Mask-RCNN (IoU > 0.5) | 0.917177163 | 64.12 | 49.6 |

CEUR Working Notes

- All participating teams with at least one graded submission, regardless of the score, should submit a CEUR working notes paper.

- The working notes paper should be submitted at https://easychair.org/conferences/?conf=clef2020, selecting the track "ImageCLEF - Multimedia Retrieval in CLEF". Add author information, paper title/abstract, and keywords, select "Task 4 - ImageCLEFdrawnui", and upload your working notes paper as a PDF.

- The working notes are prepared using the LNCS template available at: http://www.springer.de/comp/lncs/authors.html

However, CEUR-WS asks to include the following copyright box in each paper:

Copyright © 2020 for this paper by its authors.

Use permitted under Creative Commons License Attribution 4.0 International (CC BY 4.0).

CLEF 2020, 22-25 September 2020, Thessaloniki, Greece.

To help authors, we have prepared a LaTeX template you can download at:

https://drive.google.com/file/d/1T-okD-aDIoBHNt1D2CztTcmMGbm80JPG/view?usp=sharing

Citations

When referring to the ImageCLEFdrawnUI 2020 task general goals, general results, etc. please cite the following publication:

- Dimitri Fichou, Raul Berari, Paul Brie, Mihai Dogariu, Liviu Daniel Ștefan, Mihai Gabriel Constantin, Bogdan Ionescu 2020. Overview of ImageCLEFdrawnUI 2020: The Detection and Recognition of Hand Drawn Website UIs Task. In CLEF2020 Working Notes (CEUR Workshop Proceedings). CEUR-WS.org <http://ceur-ws.org>, Thessaloniki, Greece.

BibTeX:

@Inproceedings{ImageCLEFdrawnUI2020,

author = {Dimitri Fichou and Raul Berari and Paul Brie and Mihai Dogariu and Liviu Daniel \c{S}tefan and Mihai Gabriel Constantin and Bogdan Ionescu},

title = {Overview of {ImageCLEFdrawnUI} 2020: The Detection and Recognition of Hand Drawn Website UIs Task},

booktitle = {CLEF2020 Working Notes},

series = {{CEUR} Workshop Proceedings},

year = {2020},

volume = {},

publisher = {CEUR-WS.org $<$http://ceur-ws.org$>$},

pages = {},

month = {September 22-25},

address = {Thessaloniki, Greece},

}

When referring to the ImageCLEF 2020 lab general goals, general results, etc. please cite the following publication which will be published by September 2020 (also referred to as ImageCLEF general overview):

- Bogdan Ionescu, Henning Müller, Renaud Péteri, Asma Ben Abacha, Vivek Datla, Sadid A. Hasan, Dina Demner-Fushman, Serge Kozlovski, Vitali Liauchuk, Yashin Dicente Cid, Vassili Kovalev, Obioma Pelka, Christoph M. Friedrich, Alba García Seco de Herrera, Van-Tu Ninh, Tu-Khiem Le, Liting Zhou, Luca Piras, Michael Riegler, Pål Halvorsen, Minh-Triet Tran, Mathias Lux, Cathal Gurrin, Duc-Tien Dang-Nguyen, Jon Chamberlain, Adrian Clark, Antonio Campello, Dimitri Fichou, Raul Berari, Paul Brie, Mihai Dogariu, Liviu Daniel Ștefan, Mihai Gabriel Constantin, Overview of the ImageCLEF 2020: Multimedia Retrieval in Medical, Lifelogging, Nature, and Internet Applications In: Experimental IR Meets Multilinguality, Multimodality, and Interaction. Proceedings of the 11th International Conference of the CLEF Association (CLEF 2020), Thessaloniki, Greece, LNCS Lecture Notes in Computer Science, 12260, Springer (September 22-25, 2020).

BibTeX:

@inproceedings{ImageCLEF2020,

author = {Bogdan Ionescu and Henning M\"uller and Renaud P\'{e}teri and Asma Ben Abacha and Vivek Datla and Sadid A. Hasan and Dina Demner-Fushman and Serge Kozlovski and Vitali Liauchuk and Yashin Dicente Cid and Vassili Kovalev and Obioma Pelka and Christoph M. Friedrich and Alba Garc\'{\i}a Seco de Herrera and Van-Tu Ninh and Tu-Khiem Le and Liting Zhou and Luca Piras and Michael Riegler and P\aa l Halvorsen and Minh-Triet Tran and Mathias Lux and Cathal Gurrin and Duc-Tien Dang-Nguyen and Jon Chamberlain and Adrian Clark and Antonio Campello and Dimitri Fichou and Raul Berari and Paul Brie and Mihai Dogariu and Liviu Daniel \c{S}tefan and Mihai Gabriel Constantin},

title = {{Overview of the ImageCLEF 2020}: Multimedia Retrieval in Medical, Lifelogging, Nature, and Internet Applications},

booktitle = {Experimental IR Meets Multilinguality, Multimodality, and Interaction},

series = {Proceedings of the 11th International Conference of the CLEF Association (CLEF 2020)},

year = {2020},

volume = {12260},

publisher = {{LNCS} Lecture Notes in Computer Science, Springer},

pages = {},

month = {September 22-25},

address = {Thessaloniki, Greece}

}

Organizers

- Paul Brie <paul.brie(at)teleporthq.io>, teleportHQ, Cluj Napoca, Romania

- Dimitri Fichou <dimitri.fichou(at)teleporthq.io>, teleportHQ, Cluj Napoca, Romania

- Mihai Dogariu, University Politehnica of Bucharest, Romania

- Liviu Daniel Ștefan, University Politehnica of Bucharest, Romania

- Mihai Gabriel Constantin, University Politehnica of Bucharest, Romania

- Bogdan Ionescu, University Politehnica of Bucharest, Romania
