LifeCLEF 2018

Results Publication

The overview paper summarizing the results of all LifeCLEF 2018 challenges is available: HERE (pdf)

Individual working notes of task organizers and participants can be found within the CLEF 2018 CEUR-WS proceedings.

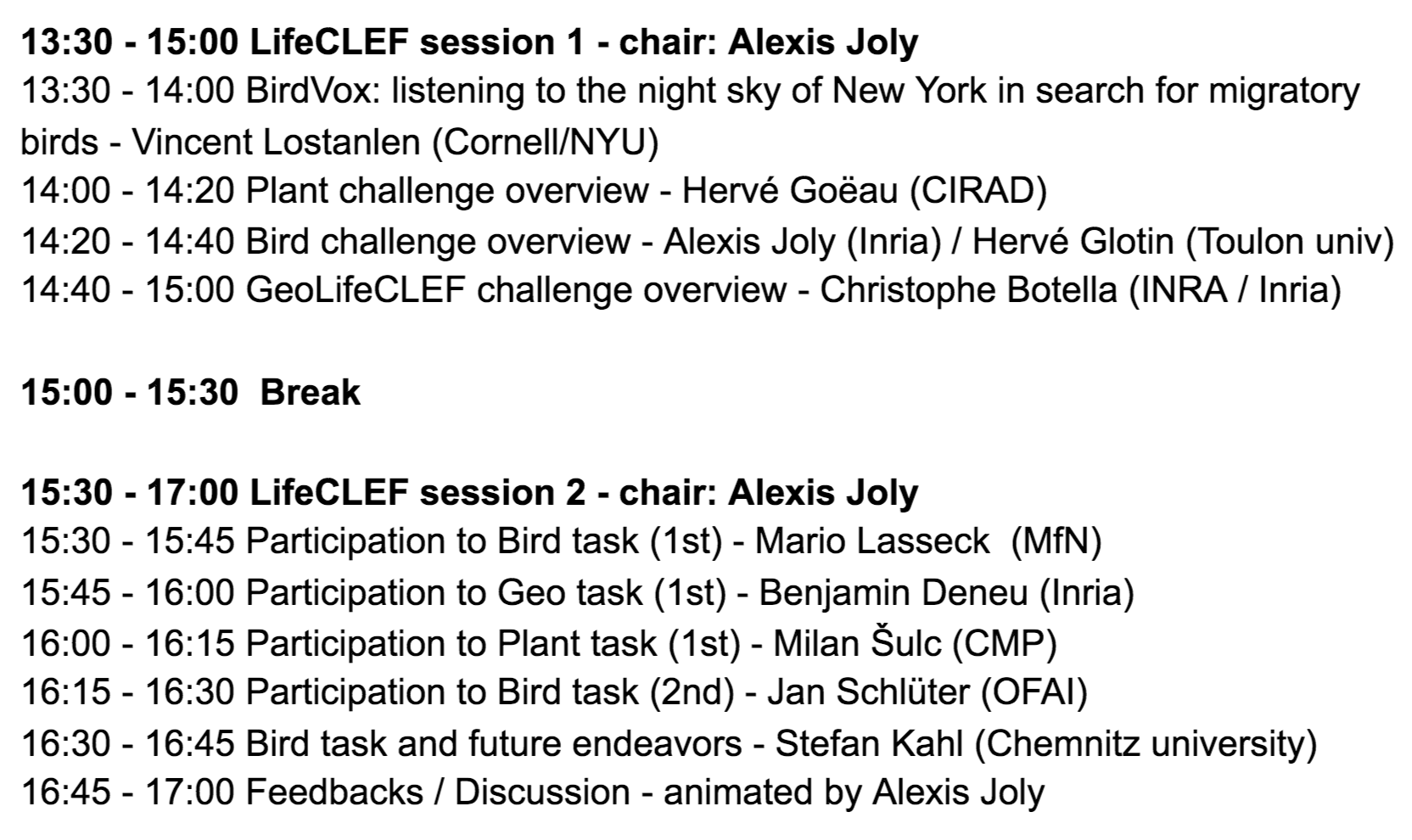

LifeCLEF 2018 Workshop Program

The full program of the CLEF conference is available here.

Schedule

- 30th Nov 2017: registration opens for all LifeCLEF tasks (until 30.04.2018)

- Jan 2018 - February 2018: training data release

- March - April 2018: test data release

- 8th May 2018: deadline for submission of runs by the participants

- 15th May 2018: release of processed results by the task organizers

- 31st May 2018: deadline for submission of working notes papers by the participants

- 10th-14th Sept 2018: CLEF 2018 Avignon, France

Motivation

Building accurate knowledge of the identity, the geographic distribution and the evolution of living species is essential for the sustainable development of humanity as well as for biodiversity conservation. Unfortunately, such basic information is often only partially available to professional stakeholders, teachers, scientists and citizens, and is often incomplete for the ecosystems that possess the highest diversity. A noticeable cause and consequence of this sparse knowledge is that identifying living plants or animals is usually impossible for the general public, and often a difficult task for professionals such as farmers, fish farmers or foresters, and even for naturalists and specialists themselves. This taxonomic impediment was identified as one of the main ecological challenges to be solved during the United Nations Conference held in Rio in 1992. In this context, an ultimate ambition is to set up innovative information systems relying on the automated identification and understanding of living organisms as a means to engage massive crowds of observers and boost the production of biodiversity and agro-biodiversity data.

The LifeCLEF 2018 lab proposes three data-oriented challenges related to this vision, in the continuity of the previous editions of the lab, but with several consistent novelties intended to push the boundaries of the state-of-the-art in several research directions at the frontier of information retrieval, machine learning and knowledge engineering.

CLEF Conference and working notes

The LifeCLEF lab is part of the Conference and Labs of the Evaluation Forum: CLEF 2018. CLEF 2018 consists of independent peer-reviewed workshops on a broad range of challenges in the fields of multilingual and multimodal information access evaluation, and a set of benchmarking activities carried out in various labs designed to test different aspects of mono- and cross-language information retrieval systems. More details about the conference can be found here. More information is also available about the CLEF Initiative.

Submitting a working note with the full description of the methods used in each run is mandatory. Any run that cannot be reproduced from its description in the working notes might be removed from the official publication of the results. Working notes are published within the CEUR-WS proceedings, resulting in the assignment of an individual DOI (URN) and indexing by many bibliography systems, including DBLP. According to the CEUR-WS policies, a light review of the working notes will be conducted by the LifeCLEF organizing committee to ensure quality. As an illustration, LifeCLEF 2017 working notes (task overviews and participant working notes) can be found within the CLEF 2017 CEUR-WS proceedings.

Registration and data access

- Each participant has to register on AIcrowd (https://www.aicrowd.com) with username, email and password. A representative team name should be used as username.

- In order to be compliant with the CLEF requirements, participants also have to fill in the following additional fields on their profile:

  - First name

  - Last name

  - Affiliation

  - Address

  - City

  - Country

- Participants can now access the dataset tab on the challenge's page. A LifeCLEF participant is considered as registered for a task as soon as he/she has downloaded a file of the task's dataset via the dataset tab of the challenge.

Registrations are handled on a per-task basis. This means that if a task has multiple challenges (subtasks), a participant can automatically access the data of all challenges in that task. There is one dataset per task, meaning we do not separate datasets on a per-challenge basis.

Contact

Coordination

- Alexis Joly, INRIA Sophia-Antipolis - ZENITH team, LIRMM, University of Montpellier, France, alexis.joly(replace-by-an-arrobe)inria.fr

- Henning Müller, University of Applied Sciences Western Switzerland in Sierre, Switzerland, henning.mueller(replace-by-an-arrobe)hevs.ch

GeoLifeCLEF

- Christophe Botella, Inra – AMAP, Montpellier, France, Christophe Botella

- Alexis Joly, INRIA Sophia-Antipolis - ZENITH team, LIRMM, University of Montpellier, France, alexis.joly(replace-by-an-arrobe)inria.fr

- Pierre Bonnet, Cirad – AMAP, Montpellier, France, pierre.bonnet(replace-by-an-arrobe)cirad.fr

BirdCLEF

- Hervé Glotin, University of Toulon, France, glotin(replace-by-an-arrobe)univ-tln.fr

- Hervé Goëau, Cirad - AMAP, Montpellier, France.

- Willem-Pier Vellinga, Xeno-Canto foundation for nature sounds, The Netherlands, wp(replace-by-an-arrobe)xeno-canto.org

- Alexis Joly, INRIA Sophia-Antipolis - ZENITH team, LIRMM, University of Montpellier, France, alexis.joly(replace-by-an-arrobe)inria.fr

ExpertLifeCLEF

- Hervé Goëau, Cirad - AMAP, Montpellier, France.

- Alexis Joly, INRIA Sophia-Antipolis - ZENITH team, LIRMM, University of Montpellier, France, alexis.joly(replace-by-an-arrobe)inria.fr

- Pierre Bonnet, Cirad – AMAP, Montpellier, France, pierre.bonnet(replace-by-an-arrobe)cirad.fr

- Concetto Spampinato, University of Catania, Italy, cspampin(replace-by-an-arrobe)dieei.unict.it

Credits

- Ivan Eggel, University of Applied Sciences Western Switzerland, Sierre, Switzerland, ivan.eggel(replace-by-an-arrobe)hevs.ch