Visual Concept Detection and Annotation Task 2011

News

The test collection of the Visual Concept Detection and Annotation Task 2011 is now freely available here.

Overview

The visual concept detection and annotation task is a multi-label classification challenge. It aims at the automatic annotation of a large number of consumer photos with multiple annotations.

The task can be solved by following three different approaches:

1) Automatic annotation with visual information only

2) Automatic annotation with Flickr user tags (tag enrichment)

3) Multi-modal approaches that consider visual information and/or Flickr user tags and/or EXIF information

In all cases the participants are asked to annotate the photos of the test set with a predefined set of keywords (the concepts). This defined set of keywords allows for an automatic evaluation and comparison of the different approaches.

We pose two subtasks:

- Annotation Task:

The annotation benchmark will be performed on a fully assessed test collection, as in previous years. The participants are asked to annotate a test set of 10,000 images with 99 visual concepts. For this purpose, an annotated training set of 8,000 images is provided. The evaluation will be conducted using the interpolated Average Precision and the example-based F-measure.

- Concept-based Retrieval Task (NEW!):

The second challenge is a concept-based retrieval task and will be performed on a set of 200,000 images from the 1 million MIR Flickr dataset. We will pose 40 topics which consist of a logical connection of concepts from the annotation task. To give an example, we could ask to "Find all images that depict a small group of persons in a landscape scenery showing trees and a river on a sunny day."

The training set of the annotation task (8,000 images annotated with 99 visual concepts) can be used to train the concept detectors. The participants are asked to submit (up to) the 1,000 most relevant photos for each topic in ranked order. The assessment will be performed in a pooling scenario using Average Precision. We may also employ an inferred variant of AP. Depending on the number of submissions, the pools may include just one run per group (the primary run).

Participants may take part in either subtask or in both.

Announcement: CVIU special issue on visual concept detection

In case you are interested in accessing the data for the CVIU special issue on concept detection, please contact me at research[at]stefanie-nowak.de

_____________________________________________________________________________________________________________________

Visual Concepts

The image set is annotated with concepts that describe the scene (indoor, outdoor, landscape....), depicted objects (car, animal, person...), the representation of image content (portrait, graffiti, art), events (travel, work...) or quality issues (overexposed, underexposed, blurry...).

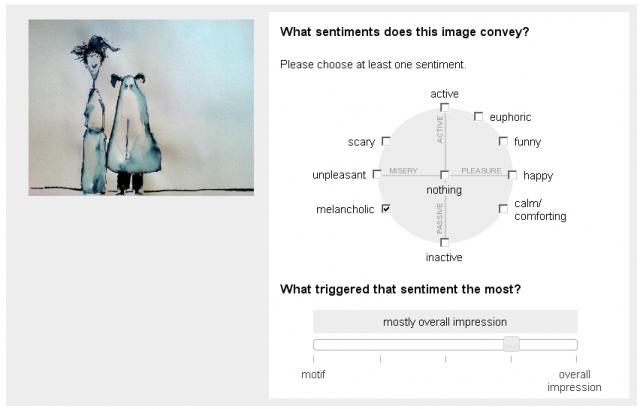

This year a special focus is placed on the detection of sentiment concepts. We follow the approach of Russell, who defines eight affect concepts in circular order, and adapt it slightly. Further, we introduce a concept "funny" which is not included in the original model. The sentiment concepts are annotated in a crowdsourcing approach by asking the turkers what sentiments are conveyed by an image.

Annotation of sentiment concepts

How to register for the task

Due to the database restrictions, it is necessary to sign a user agreement to get access to the data. You can find the license agreement here. Please print it, sign it and send a scanned copy or a fax to Henning (see also the instructions page in the document).

ImageCLEF also has its own registration interface. Here you can choose a user name and a password. This registration interface is used, for example, for the submission of runs. If you already have a login from former ImageCLEF benchmarks, you can migrate it to ImageCLEF 2011 here.

_____________________________________________________________________________________________________________________

Data Sets

The task uses a subset of the MIR Flickr 1 million image dataset for the annotation challenge. The MIR Flickr collection supplies all original tag data provided by the Flickr users (further denoted as Flickr user tags). These Flickr user tags are made available for the textual and multi-modal approaches. For most of the photos the EXIF data is included and may be used. The annotations are provided as plain txt files.

Training Set: Annotation Task

The training set for the annotation task is now available! It consists of 8,000 photos annotated with 99 visual concepts, the Photo Tagging Ontology, EXIF data and Flickr user tags. Further, we extracted basic RGB SIFT features with dense sampling and standard parameters in text format using the Color Descriptor Software from the University of Amsterdam. (Command: ./colorDescriptor image.jpg --detector densesampling --descriptor rgbsift --output output.txt) For further information on the format or other colour descriptors, please have a look at the ReadMe. The same features will be provided for the test set of the annotation task.
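
Participants who want to compute the same features for additional images could wrap the command quoted above in a small script. The following is a minimal sketch, assuming the `colorDescriptor` binary sits in the working directory; the function names `build_command` and `extract` are hypothetical, and only the flags quoted above are used.

```python
import subprocess

def build_command(image_path, output_path, binary="./colorDescriptor"):
    """Return the argument list for the dense-sampling RGB SIFT command."""
    return [
        binary, image_path,
        "--detector", "densesampling",
        "--descriptor", "rgbsift",
        "--output", output_path,
    ]

def extract(image_path, output_path):
    """Run the extraction; raises CalledProcessError if the tool fails."""
    subprocess.run(build_command(image_path, output_path), check=True)

# Building the command does not require the binary to be installed.
command = build_command("image.jpg", "output.txt")
print(" ".join(command))
```

Passing the arguments as a list avoids shell quoting problems with unusual file names.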

The training set including metadata can be downloaded here.

The features can be downloaded here.

DOWNLOAD only for registered participants who signed the license agreement. The login and password are listed in the "Detail" view of the collection in the ImageCLEF registration system.

Test Set: Annotation Task

The test set for the annotation task is now available. It consists of 10,000 photos with EXIF data and Flickr user tags.

The test set including metadata can be downloaded here.

DOWNLOAD only for registered participants who signed the license agreement. The login and password are listed in the "Detail" view of the collection in the ImageCLEF registration system.

Dataset: Concept-based Retrieval Task

The dataset for the concept-based retrieval task consists of the 200,000 Flickr images stored in images2.tar and images3.tar of the MIR Flickr 1 million image dataset. Please see the instructions on how to download the data.

The data is available here and here.

The EXIF data can be found here and here.

The Flickr user tags are available here and here.

Please note that you are allowed to use the training set of the annotation task to train the concept detector for the retrieval task.

DOWNLOAD only for registered participants who signed the license agreement. The login and password are listed in the "Detail" view of the collection in the ImageCLEF registration system.

_____________________________________________________________________________________________________________________

Topics: Concept-based Retrieval Task

The topics for the concept-based retrieval task are based on the Wikipedia task topics from 2009-2011 and adapted to the characteristics of the collection. The Wikipedia topics were developed by using query logs and involving participants in the definition. Please have a look at the description of the Wikipedia task for more information on topic development.

The topics consist of a textual description of the information need as well as up to five example images that are taken from the training set of the photo annotation task. The format is similar to that of the Wikipedia task and is illustrated by the following example:

<topic>

<number> 1 </number>

<title>landscape images</title>

<image> landscape.jpg </image>

<narrative>Find images depicting a landscape scenery. Pictures that include man-made objects like buildings, bridges or vehicles are not relevant.</narrative>

</topic>
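
A topic in this format can be read with a standard XML parser. The following is a minimal sketch using Python's `xml.etree.ElementTree`; it assumes each topic is a well-formed `<topic>` element like the example above, and the function name `parse_topic` is hypothetical. How several topics are wrapped in the distributed file is not specified here, so the sketch handles a single topic.

```python
import xml.etree.ElementTree as ET

TOPIC_XML = """<topic>
<number> 1 </number>
<title>landscape images</title>
<image> landscape.jpg </image>
<narrative>Find images depicting a landscape scenery. Pictures that include man-made objects like buildings, bridges or vehicles are not relevant.</narrative>
</topic>"""

def parse_topic(xml_text):
    """Parse one <topic> element into a dict, stripping padding whitespace."""
    root = ET.fromstring(xml_text)
    return {
        "number": int(root.findtext("number").strip()),
        "title": root.findtext("title").strip(),
        # A topic may carry up to five example images, so collect all of them.
        "images": [el.text.strip() for el in root.findall("image")],
        "narrative": root.findtext("narrative").strip(),
    }

topic = parse_topic(TOPIC_XML)
print(topic["number"], topic["title"], topic["images"])
```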

The topics can now be downloaded here.

DOWNLOAD only for registered participants who signed the license agreement. The login and password are listed in the "Detail" view of the collection in the ImageCLEF registration system.

_____________________________________________________________________________________________________________________

Submissions

Participants may submit up to 5 runs for the photo annotation task and up to 10 runs for the concept-based retrieval task. Participation in one or both tasks is possible. Please note that it is essential to denote the primary run for the concept-based retrieval task. This run will definitely contribute to the pool in relevance assessment.

The submission system will open by 13.05.2011.

Submission Format: Annotation Task

The submission format is identical to the annotation format of the training data, except that you are expected to give a confidence score for the presence of each concept. That means you have to submit a file containing the same number of columns, but each number can be an arbitrary floating point number between 0 and 1, where higher numbers denote higher confidence in the presence of a particular concept. Please submit all results in a single txt file.

For the example-based evaluation measure, we need a binary mapping of the confidence scores to 0 or 1. Please append binary annotations for all concepts and images below the confidence values.

So, the submission file should look like:

imageID00001.jpg 0.34 0.67 0.78 .... 0.7 (altogether 99 float values between 0 and 1)

...

imageID10000.jpg 0.34 0.67 0.78 .... 0.7 (altogether 99 float values between 0 and 1)

imageID00001.jpg 0 1 1 0 1 1 1 1 .... 1 (altogether 99 binary values, 0 or 1)

...

imageID10000.jpg 0 1 1 1 1 0 0 1 .... 0 (altogether 99 binary values, 0 or 1)

The submission system automatically checks whether the submission file is correctly formatted. If it is not, you will get an error message during upload.

Please note that we restrict the number of runs per group to a maximum of 5 submissions.
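
A file in this layout could be generated along the following lines. This is a minimal sketch, not the organisers' tooling: the function name `format_submission`, the 0.5 threshold used to derive the binary rows, the four-decimal formatting and the single-space separator (taken from the example above) are all assumptions.

```python
def format_submission(scores, threshold=0.5, n_concepts=99):
    """Return submission lines: all confidence rows first, then all binary rows.

    scores: dict mapping image file name -> list of floats in [0, 1].
    threshold: hypothetical cut-off used to binarise the confidences.
    """
    conf_lines, bin_lines = [], []
    for image_id in sorted(scores):
        values = scores[image_id]
        if len(values) != n_concepts:
            raise ValueError(f"{image_id}: expected {n_concepts} values, got {len(values)}")
        if any(not 0.0 <= v <= 1.0 for v in values):
            raise ValueError(f"{image_id}: confidence outside [0, 1]")
        conf_lines.append(" ".join([image_id] + [f"{v:.4f}" for v in values]))
        bin_lines.append(" ".join([image_id] + ["1" if v >= threshold else "0" for v in values]))
    return conf_lines + bin_lines

# Toy example with 3 concepts instead of 99, to keep the output readable.
demo = format_submission(
    {"imageID00002.jpg": [0.9, 0.1, 0.5], "imageID00001.jpg": [0.34, 0.67, 0.78]},
    n_concepts=3,
)
print("\n".join(demo))
```

Checking the column count and value range locally catches the most likely formatting errors before upload.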

Submission Format: Concept-based Retrieval Task

Submission follows the standard TREC format. For each topic, (up to) the 1000 most relevant images should be submitted. A *space* is used as delimiter between columns. Each run includes the answers for all topics. Different runs are uploaded in separate files.

Example:

25 1 im99156.jpg 0 4238 xyzT10af5

25 1 im11284.jpg 1 4223 xyzT10af5

25 1 im16998.jpg 2 4207 xyzT10af5

25 1 im15001.jpg 3 4194 xyzT10af5

... etc

where:

(1) The first column is the topic number

(2) The second column is not used in ImageCLEF 2011 and should be set to 1.

(3) The third column is the official image id of the retrieved image. In our case it equals the filename of the image.

(4) The fourth column is the rank position (**starting from 0**).

(5) The fifth column shows the score (integer or floating point) that generated the ranking. This score MUST be in descending (non-increasing) order and is important to include so that we can handle tied scores (for a given run) in a uniform fashion (the evaluation routines rank documents from these scores, not from your ranks).

(6) The sixth column is called the "run tag" and should be a unique identifier for your group AND for the method used. That is, each run should have a different tag that identifies the group and the method that produced the run.
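
The six columns described above can be produced from per-topic scores as follows. This is a minimal sketch under stated assumptions: the function name `format_run` and the input structure (a dict from topic number to a dict of image scores) are hypothetical, and ties are broken by image id only to make the output deterministic.

```python
def format_run(topic_scores, run_tag, max_results=1000):
    """Build run lines: topic, 1, image id, rank (from 0), score, run tag."""
    lines = []
    for topic in sorted(topic_scores):
        # Sort by descending score so that scores are non-increasing by rank.
        ranked = sorted(topic_scores[topic].items(), key=lambda kv: (-kv[1], kv[0]))
        for rank, (image_id, score) in enumerate(ranked[:max_results]):
            lines.append(f"{topic} 1 {image_id} {rank} {score} {run_tag}")
    return lines

run = format_run(
    {25: {"im11284.jpg": 4223, "im99156.jpg": 4238, "im16998.jpg": 4207}},
    "xyzT10af5",
)
print("\n".join(run))
```

Deriving the rank from the sorted scores, rather than storing it separately, keeps the two columns consistent, which matters because the evaluation ranks documents from the scores.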

_____________________________________________________________________________________________________________________

Results: Annotation Task

The results for the Photo Annotation Task 2011 are now available. We received submissions from 18 groups with 79 runs in total. Of these, 46 submissions use only visual information, 8 submissions use only textual information and 25 submissions utilise multi-modal approaches.

We applied three measures to determine the quality of the annotations: one for the evaluation per concept and two for the evaluation per photo. The evaluation per concept was performed with the Mean interpolated Average Precision (MAP). The evaluation per example was performed with the example-based F-Measure (F-ex) and the Semantic R-Precision (SR-Precision). For the evaluation per concept the provided confidence annotation scores were used, while the evaluation per example considered the binary annotation scores (F-ex) and the ranked predictions (SR-Precision).

You can find the results on the following pages:
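
To make the two simpler measures concrete, the following sketch computes an interpolated AP for one concept and an example-based F-measure over a set of photos. It is an illustration under assumptions, not the official evaluation script: the interpolation shown (each precision replaced by the best precision at that or any later relevant hit) is one common variant, photos with no predicted and no true concepts are scored 0, and both function names are hypothetical. SR-Precision is not covered.

```python
def interpolated_ap(scores, labels):
    """Interpolated AP for one concept from confidences and 0/1 labels."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    precisions = []  # precision at each relevant hit, in rank order
    hits = 0
    for rank, i in enumerate(order, start=1):
        if labels[i]:
            hits += 1
            precisions.append(hits / rank)
    if not precisions:
        return 0.0
    # Interpolate: replace each precision by the maximum at or after it.
    best = 0.0
    for k in range(len(precisions) - 1, -1, -1):
        best = max(best, precisions[k])
        precisions[k] = best
    return sum(precisions) / len(precisions)

def example_based_f(predicted, truth):
    """Mean per-photo F1 between binary prediction rows and ground-truth rows."""
    total = 0.0
    for pred_row, true_row in zip(predicted, truth):
        tp = sum(1 for p, t in zip(pred_row, true_row) if p and t)
        n_pred, n_true = sum(pred_row), sum(true_row)
        if n_pred + n_true:  # assumption: empty vs. empty contributes 0
            total += 2 * tp / (n_pred + n_true)
    return total / len(predicted)

ap = interpolated_ap([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 1])
f_ex = example_based_f([[1, 1, 0], [0, 1, 0]], [[1, 0, 0], [0, 1, 0]])
print(round(ap, 4), round(f_ex, 4))
```

MAP is then the mean of the per-concept AP values over all 99 concepts.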

* MAP Results

* Results for Example-based Evaluation

The evaluation script for the interpolated AP can be found here.

Results: Concept-based Retrieval Task

The results for the concept-based retrieval task are now available. In total, 4 groups participated with 31 runs. 7 runs have been submitted in the textual configuration, 14 runs use only visual information, and 10 runs consider multi-modal information.

Further, the 31 runs can be split into 15 runs that consider manual input and 16 runs that are completely automated. The runs denoted with "manual" use a manual intervention to construct the query out of the narrative of the topics, e.g. by deriving a boolean connection of concepts. After query formulation these runs also retrieve images in an automated manner.
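
As an illustration of what a boolean connection of concepts might look like in practice, the sketch below scores images by combining concept-detector confidences with a fuzzy AND (minimum) and NOT (one minus the confidence). This is an assumption made for illustration only and does not describe any participant's method; the function name `score_query`, the concept names and the image names are hypothetical.

```python
def score_query(conf, required=(), excluded=()):
    """Fuzzy AND over required concepts and negated excluded concepts.

    conf: dict mapping concept name -> detector confidence in [0, 1].
    """
    terms = [conf[c] for c in required] + [1.0 - conf[c] for c in excluded]
    return min(terms) if terms else 0.0

# Hypothetical detector outputs for two images.
images = {
    "a.jpg": {"landscape": 0.9, "car": 0.2},
    "b.jpg": {"landscape": 0.8, "car": 0.7},
}

# Query: landscape AND NOT car.
query_scores = {
    name: score_query(conf, required=["landscape"], excluded=["car"])
    for name, conf in images.items()
}
ranked = sorted(query_scores, key=lambda name: -query_scores[name])
print(ranked)
```

Other combination rules, such as multiplying the confidences, would serve equally well for a sketch; the minimum is used here only because it keeps the score in the same range as the inputs.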

The result table can be found here.

_____________________________________________________________________________________________________________________

Tentative Schedule

- 31.01.2011: registration opens for all CLEF tasks

- 01.04.2011: training data and concepts release for annotation task

- 01.04.2011: data release for concept-based retrieval task

- 01.05.2011: test data release for annotation task

- 02.05.2011: topic release for concept-based retrieval task

- 15.05.2011: registration closes for all ImageCLEF tasks

- 13.06.2011: submission of runs --> extension to 19.06.2011!

- 15.07.2011: release of results

- 14.08.2011: submission of working notes papers

- 19.09.2011-22.09.2011: CLEF 2011 Conference, Amsterdam, The Netherlands.

_____________________________________________________________________________________________________________________

Organizers

Stefanie Nowak, Fraunhofer Institute for Digital Media Technology IDMT, Ilmenau, Germany

Judith Liebetrau, Fraunhofer Institute for Digital Media Technology IDMT and Technical University of Ilmenau, Ilmenau, Germany

Contact

Dr. Uwe Kühhirt, Fraunhofer Institute for Digital Media Technology IDMT, Ilmenau, Germany, uwe.kuehhirt[at]idmt.fraunhofer.de